Generating images and more with Generative Adversarial Networks

GAN-generated images of volcanoes, from a paper by Nguyen et al.

A great deal of machine learning progress in recent years has been based on the training of neural networks using supervised learning. For example, if you feed the right kind of neural network a large collection of pictures and tell it which ones show cats and which ones don’t, it can eventually learn to discriminate on its own which new unlabeled pictures are cats and which ones are not (or, in another example, whether a picture shows a hot dog).

There is only so much labeled data out there. This has made unsupervised learning, or the training of neural networks with unlabeled data, a much hotter area of research lately. In 2014, researchers at the University of Montreal had a great idea for where to get new data: from another neural network.

They called this arrangement Generative Adversarial Networks, or GANs. A GAN can be thought of as a pair of competing neural networks: a generator G and a discriminator D. The generator takes as input random noise sampled from some distribution and attempts to generate new data that resembles the real data. The discriminator tries to tell real data from generated data. The two networks are trained in alternation: the discriminator learns to classify its inputs more accurately, and the error signal from its judgments is passed back (or “back-propagated”) through it to the generator, which adjusts its own weights to do a better job of fooling the discriminator. Deep learning pioneer and Facebook AI director Yann LeCun called GANs “the coolest idea in deep learning in the last 20 years.”
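To make the alternating updates concrete, here is a minimal pure-Python sketch. It is a toy under stated assumptions, not a practical GAN: the “real data” is assumed to be one-dimensional samples from a normal distribution with mean 4, the generator is assumed to be the linear map G(z) = a·z + b, and the discriminator is assumed to be logistic regression D(x) = sigmoid(w·x + c), with gradients written out by hand rather than computed by a deep learning framework. All function names are hypothetical. The generator minimizes −log D(G(z)), the variant the original paper suggests using in practice.

```python
import math
import random


def sigmoid(t):
    # Numerically stable logistic function.
    if t >= 0:
        return 1.0 / (1.0 + math.exp(-t))
    e = math.exp(t)
    return e / (1.0 + e)


def generate(g, z):
    # Toy generator G(z) = a*z + b, parameters g = (a, b).
    a, b = g
    return a * z + b


def discriminate(d, x):
    # Toy discriminator D(x) = sigmoid(w*x + c): estimated probability x is real.
    w, c = d
    return sigmoid(w * x + c)


def d_loss(d, g, reals, zs):
    # Binary cross-entropy with real samples labeled 1 and generated samples 0.
    eps = 1e-12
    total = 0.0
    for x, z in zip(reals, zs):
        total -= math.log(max(discriminate(d, x), eps))
        total -= math.log(max(1.0 - discriminate(d, generate(g, z)), eps))
    return total / len(reals)


def g_loss(d, g, zs):
    # Generator loss: minimize -log D(G(z)), i.e. try to make D call fakes real.
    eps = 1e-12
    return -sum(math.log(max(discriminate(d, generate(g, z)), eps)) for z in zs) / len(zs)


def d_step(d, g, reals, zs, lr):
    # One gradient-descent step on d_loss with the generator held fixed.
    w, c = d
    grad_w = grad_c = 0.0
    for x, z in zip(reals, zs):
        p_real = discriminate(d, x)
        fake = generate(g, z)
        p_fake = discriminate(d, fake)
        grad_w += -(1.0 - p_real) * x + p_fake * fake
        grad_c += -(1.0 - p_real) + p_fake
    n = len(reals)
    return (w - lr * grad_w / n, c - lr * grad_c / n)


def g_step(d, g, zs, lr):
    # One gradient-descent step on g_loss with the discriminator held fixed.
    # The gradient flows back through D to G's output, then on to (a, b).
    a, b = g
    w, _ = d
    grad_a = grad_b = 0.0
    for z in zs:
        p_fake = discriminate(d, generate(g, z))
        dloss_dfake = -(1.0 - p_fake) * w  # derivative of -log D(x) at x = G(z)
        grad_a += dloss_dfake * z
        grad_b += dloss_dfake
    n = len(zs)
    return (a - lr * grad_a / n, b - lr * grad_b / n)


def train(steps=2000, batch=64, lr=0.05, seed=0):
    # Assumed setup: real data ~ N(4, 1), generator noise z ~ N(0, 1).
    rng = random.Random(seed)
    g, d = (1.0, 0.0), (0.1, 0.0)
    for _ in range(steps):
        reals = [rng.gauss(4.0, 1.0) for _ in range(batch)]
        zs = [rng.gauss(0.0, 1.0) for _ in range(batch)]
        d = d_step(d, g, reals, zs, lr)  # discriminator's turn
        zs = [rng.gauss(0.0, 1.0) for _ in range(batch)]
        g = g_step(d, g, zs, lr)  # generator's turn
    return g, d
```

Calling `train()` returns the final generator parameters `(a, b)` and discriminator parameters `(w, c)`. The toy is only meant to show the two alternating turns; with plain gradient steps, a pair like this may circle around the real mean instead of settling on it, a small-scale version of the balancing problem described next.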

Because the lead author of the original University of Montreal paper went on to become a staff researcher at Google Brain, Wired Magazine described GANs under the headline “Google’s Dueling Neural Networks Spar to Get Smarter, No Humans Required.” Despite that bombastic claim, humans are very much required to configure and tune these networks carefully; for example, if the discriminator does its job too well, the generator gets no useful signal and stops learning as it iterates through the data, and vice versa.
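The tuning problem has a concrete source in the training objective. The original paper frames training as a two-player minimax game over a value function $V(D, G)$, where $p_{\text{data}}$ is the real-data distribution and $p_z$ is the noise distribution:

$$\min_G \max_D V(D, G) = \mathbb{E}_{x \sim p_{\text{data}}}\big[\log D(x)\big] + \mathbb{E}_{z \sim p_z}\big[\log\big(1 - D(G(z))\big)\big]$$

Writing $D = \sigma(s)$, where $s$ is the discriminator's pre-sigmoid score (notation introduced here for illustration), the derivatives show why an overconfident discriminator stalls the generator:

$$\frac{\partial}{\partial s}\log\big(1 - \sigma(s)\big) = -\sigma(s) = -D, \qquad \frac{\partial}{\partial s}\log \sigma(s) = 1 - \sigma(s) = 1 - D$$

When the discriminator confidently rejects generated samples, $D(G(z))$ is close to 0, and so is the gradient the generator receives from the $\log(1 - D(G(z)))$ term. The paper therefore suggests training G to maximize $\log D(G(z))$ instead, whose gradient stays close to 1 in that same regime.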

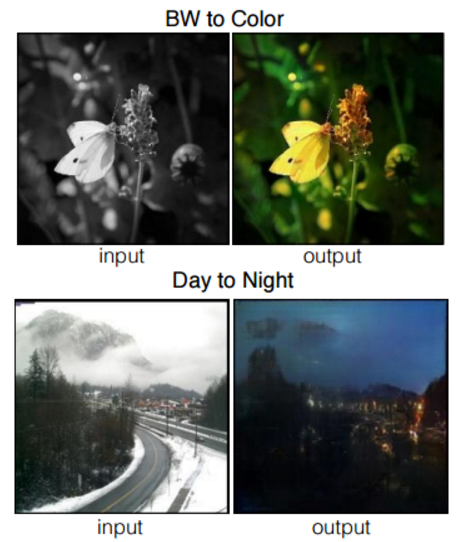

Other humans have gone on to create variations on GANs that perform new classes of tasks. For example, Conditional GANs feed additional information to both a generator and its discriminator partner to impose conditions on the generated image. In the following examples from the paper Image-to-Image Translation with Conditional Adversarial Networks, written by researchers at the Berkeley AI Research Laboratory, the generator created a color version of a black-and-white image and a night view of a daytime scene:
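One common way to impose a condition is to concatenate the conditioning information to the inputs of both networks. The sketch below assumes the condition is a class label encoded as a one-hot vector; in image-to-image translation the condition is instead the input image, and the discriminator judges (input, output) pairs. The class and function names are hypothetical, the single-layer networks are untrained, and the code only shows where the condition enters each network.

```python
import math
import random


def sigmoid(t):
    # Numerically stable logistic function.
    if t >= 0:
        return 1.0 / (1.0 + math.exp(-t))
    e = math.exp(t)
    return e / (1.0 + e)


def one_hot(label, num_classes):
    # Encode the condition (a class label) as a one-hot vector.
    vec = [0.0] * num_classes
    vec[label] = 1.0
    return vec


def dense(layer, inputs):
    # Fully connected layer: one weight row and one bias per output unit.
    weights, bias = layer
    return [sum(w * v for w, v in zip(row, inputs)) + b
            for row, b in zip(weights, bias)]


def random_dense(n_out, n_in, rng):
    # Randomly initialized (untrained) layer.
    weights = [[rng.gauss(0.0, 0.5) for _ in range(n_in)] for _ in range(n_out)]
    return weights, [0.0] * n_out


class ConditionalGAN:
    """Untrained toy pair showing where the condition enters each network."""

    def __init__(self, noise_dim, data_dim, num_classes, seed=0):
        rng = random.Random(seed)
        self.num_classes = num_classes
        # Generator input is [noise ; condition].
        self.g_layer = random_dense(data_dim, noise_dim + num_classes, rng)
        # Discriminator input is [sample ; condition].
        self.d_layer = random_dense(1, data_dim + num_classes, rng)

    def generate(self, noise, label):
        y = one_hot(label, self.num_classes)
        return [math.tanh(v) for v in dense(self.g_layer, noise + y)]

    def discriminate(self, sample, label):
        # Probability that `sample` is a real example of class `label`.
        y = one_hot(label, self.num_classes)
        (logit,) = dense(self.d_layer, sample + y)
        return sigmoid(logit)
```

Training would proceed as for an ordinary GAN, except that each real example is paired with its true label and each generated example with the label it was asked to produce, so the discriminator can penalize samples that look real but do not match their condition.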

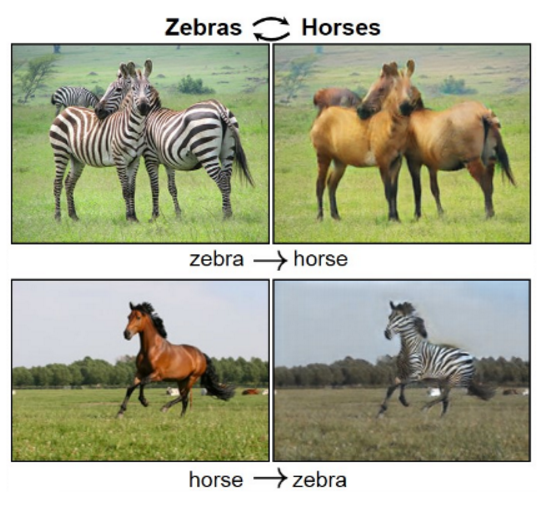

Some of the same Berkeley researchers also developed CycleGANs, in which such translations can happen in both directions, for example converting a picture of a horse to look like a zebra and vice versa.
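What lets CycleGANs learn translations in both directions without paired examples is a cycle-consistency term: translating an image into the other domain and back again should recover the original. The sketch below computes that term with the mean absolute (L1) difference. The scalar “translators” standing in for the two generators are hypothetical; in a full CycleGAN this term is added, with a weighting factor, to an adversarial loss for each direction.

```python
def mean_abs_diff(a, b):
    # L1 distance, averaged over two equal-length lists of numbers.
    return sum(abs(u - v) for u, v in zip(a, b)) / len(a)


def cycle_consistency_loss(G, F, xs, ys):
    # G translates domain X -> Y (e.g. horse -> zebra); F translates Y -> X.
    forward = mean_abs_diff([F(G(x)) for x in xs], xs)   # F(G(x)) should be x
    backward = mean_abs_diff([G(F(y)) for y in ys], ys)  # G(F(y)) should be y
    return forward + backward


# Hypothetical scalar stand-ins for two trained generators.
def G(x):
    return 2 * x + 1


def F_inverse(y):
    return (y - 1) / 2  # exact inverse of G


def F_wrong(y):
    return y / 2  # not an inverse of G


xs, ys = [0.0, 0.5, 3.0], [1.0, 2.0, 5.0]
print(cycle_consistency_loss(G, F_inverse, xs, ys))  # 0.0
print(cycle_consistency_loss(G, F_wrong, xs, ys))    # 1.5
```

A pair of mappings that undo each other scores zero, while a mismatched pair is penalized even though neither mapping ever saw a paired example.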

Researchers at GA-CCRi have been exploring several ways to apply GANs for our customers:

- Using conditional CycleGANs to map between visible-light images and infrared images

- Improving the resolution of overhead imagery

- Labeling text with semantic tags

- Generating text that belongs to specific semantic categories

The last two applications are especially interesting: most discussions of GANs revolve around generated images, but the same techniques can be applied to other kinds of data, such as text.

GANs offer a lot of interesting possibilities!